Diurn.ai: The Instagram page that summarizes the news

Introduction¶

In the internet era, news is everywhere, and it has become trivial to learn about an event that has just happened on the other side of the globe. However, this huge quantity of information arrives in fragments and is poorly analyzed. As a result, it becomes hard to distinguish the important events from lesser news. Keeping up with the news almost instantly is crucial and yet, paradoxically, with the decline of print media, less time is spent doing so, and the process often consists of reading only the headlines. To tackle this issue, several platforms and media outlets promise to send their users only the important news, and some even offer a daily summary. This work is done by professionals (journalists) who decide what has to be summarized.

But what if this process were done automatically? What if an algorithm could retrieve the hot news and condense it, while ensuring reliability and impartiality?

With the recent advances in Deep Learning, Natural Language Processing (NLP) is becoming more and more powerful and accessible. The summarization task, hard for machines but also for humans, is starting to give satisfactory results thanks to state-of-the-art attention-based deep learning models.

To learn more about the recent advances in NLP, I decided, as a personal project, to tackle summarization by implementing an algorithm that scrapes the news and summarizes it in an Instagram post. Why Instagram? Because it is a popular social network, used in particular by activists, which shows that beyond posting photos of cute dogs or holiday memories, it can also be used to inform (ideally impartially).

To view the result, browse the @diurn.ai account on Instagram.

News Scraping¶

To retrieve the articles' text, I use the powerful Newspaper3k library. With only an article's URL, it outputs the title and the body of the article.

For the moment, the chosen articles are the 3 main articles in the World news category on Reuters.com. To get their URLs, I scrape the page with the Beautiful Soup library.

The choice of Reuters as a news source is arbitrary; one improvement would be to retrieve the trending articles from several sources.
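A minimal sketch of the two scraping steps described in this section, assuming the `beautifulsoup4` and `newspaper3k` packages are installed. The function names and the `"/article/"` link filter are illustrative assumptions, not the actual Reuters markup or the code behind the account. The third-party imports sit inside the functions so that each step can be tried on its own.

```python
from urllib.parse import urljoin


def extract_article_urls(html, base_url, limit=3):
    """Return the first `limit` distinct article links found in a listing page."""
    from bs4 import BeautifulSoup  # third-party: pip install beautifulsoup4

    soup = BeautifulSoup(html, "html.parser")
    urls = []
    for link in soup.find_all("a", href=True):
        if len(urls) >= limit:
            break
        url = urljoin(base_url, link["href"])  # resolve relative links
        # Assumption: article links contain "/article/"; adapt to the real page.
        if "/article/" in url and url not in urls:
            urls.append(url)
    return urls


def fetch_article(url):
    """Download one article and return its (title, body text)."""
    from newspaper import Article  # third-party: pip install newspaper3k

    article = Article(url)
    article.download()
    article.parse()
    return article.title, article.text


# Usage (needs network access and the HTML of the listing page in `listing_html`):
# for url in extract_article_urls(listing_html, "https://www.reuters.com"):
#     title, text = fetch_article(url)
```

Keeping the link extraction separate from the download makes it easy to swap in another news source later: only the filter in `extract_article_urls` has to change.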

Article summarization¶

Overview of Natural Language Processing¶

Note: this part presents an overview of text summarization using Deep Learning methods but should not be considered a course or tutorial. To learn about NLP with Deep Learning, I suggest following the great Stanford CS 224N course.

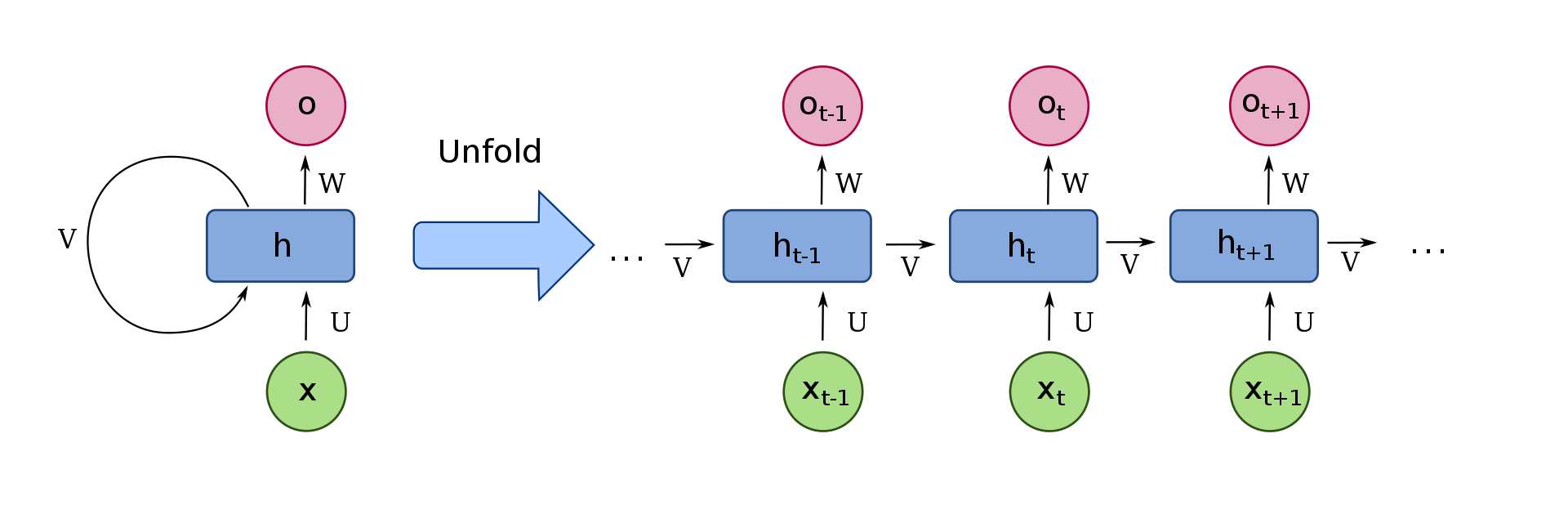

Recurrent Neural Networks (RNN)¶

An RNN is a generalization of feedforward neural networks to sequences. Given a sequence of inputs $[x_1,...,x_T]$, an RNN computes the sequence of outputs $[o_1,...,o_T]$ by iterating:

$$h_t = \sigma(Ux_t + Vh_{t-1})$$

$$o_t = Wh_t$$

where $\sigma$ is the activation function, $h_t$ is the hidden state at step $t$, and $U$, $V$ and $W$ are the weight matrices.

This recurrence lets the network exhibit temporal dynamic behavior, which is particularly useful in domains such as speech recognition. However, it is not clear how to apply a plain RNN to problems whose inputs and outputs have different lengths.
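To make the recurrence concrete, here is a small dependency-free sketch of the forward pass. It assumes $\sigma = \tanh$ and uses arbitrary toy weights; the helper names `matvec` and `rnn_forward` are made up for this illustration.

```python
import math


def matvec(M, v):
    """Multiply matrix M (a list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def rnn_forward(xs, U, V, W, h0):
    """Apply h_t = tanh(U x_t + V h_{t-1}) and o_t = W h_t over the sequence xs."""
    h = h0
    outputs = []
    for x in xs:
        pre_activation = [a + b for a, b in zip(matvec(U, x), matvec(V, h))]
        h = [math.tanh(p) for p in pre_activation]
        outputs.append(matvec(W, h))
    return outputs, h


# Toy sizes: 2-d inputs, 3-d hidden state, 1-d outputs (values are arbitrary).
U = [[0.5, -0.2], [0.1, 0.3], [-0.4, 0.2]]
V = [[0.1, 0.0, 0.2], [0.0, 0.1, -0.1], [0.3, -0.2, 0.1]]
W = [[1.0, -1.0, 0.5]]
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

outputs, h_T = rnn_forward(xs, U, V, W, h0=[0.0, 0.0, 0.0])
print(len(outputs), len(h_T))  # one output per input step, final 3-d state
```

Note that the loop produces exactly one output per input, which is why this plain formulation cannot by itself map a long article to a shorter summary.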

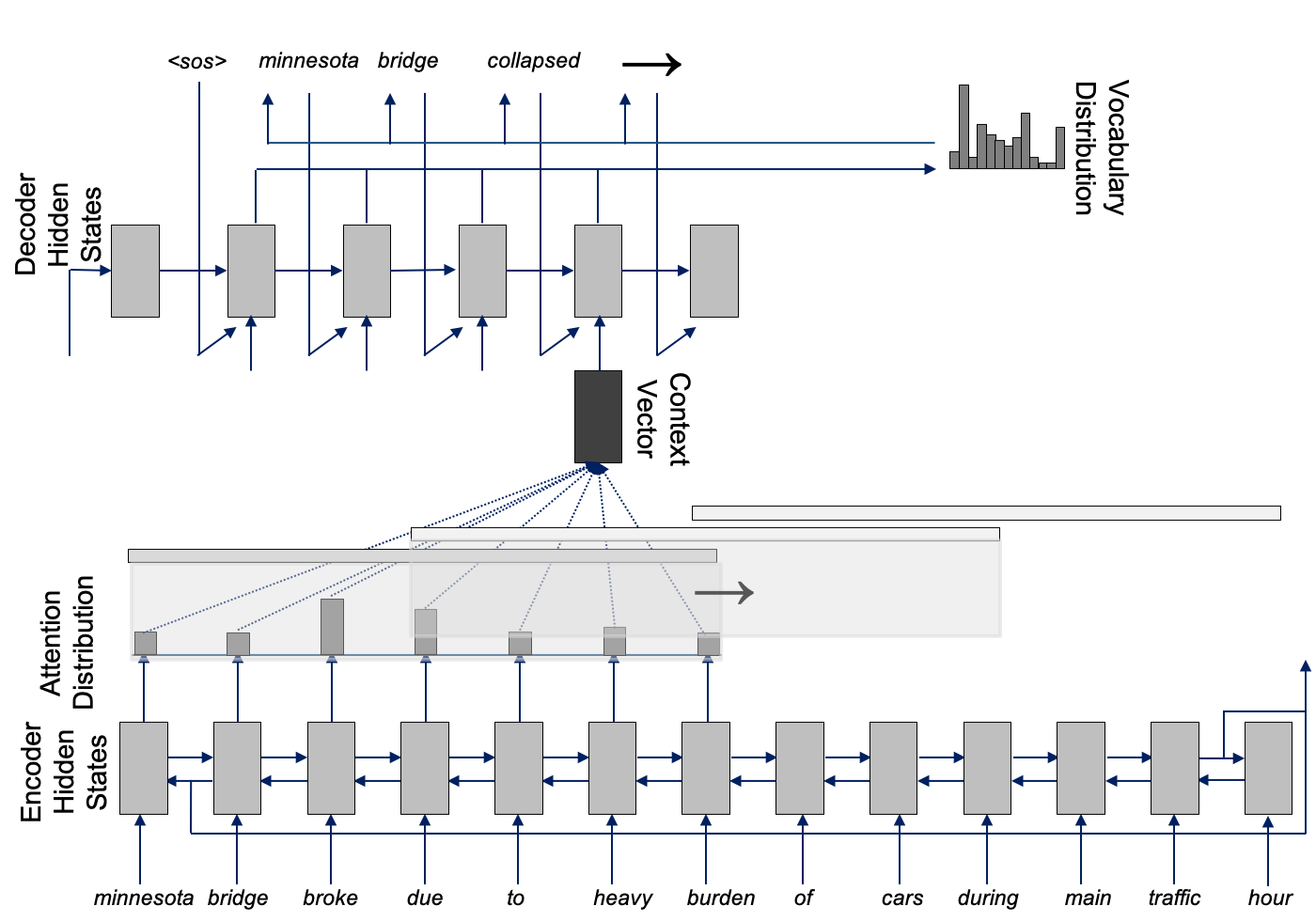

Sequence to Sequence models (seq2seq)¶

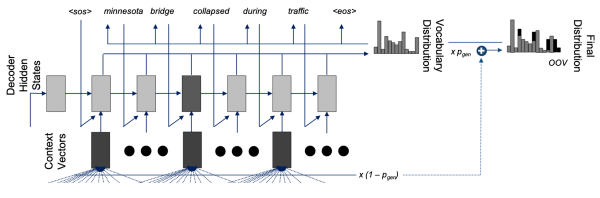

Seq2seq models are a class of Recurrent Neural Network (RNN) models used to solve complex NLP tasks. In a seq2seq model used for abstractive summarization, an RNN is trained to map an input sequence $[x_1,x_2,...,x_{n_x}]$ of word vectors to an output sequence $[y_1,y_2,...,y_{n_y}]$, where $n_x$ and $n_y$ may differ. This is the more general case, and it is the one we face in text summarization. It is more complex because the entire input sequence must be known before the model starts predicting the output sequence. Here is how it works:

- An RNN layer acts as an encoder: it processes the input sequence and returns its own internal state. Only this state is used as "context"; the outputs of the encoder RNN are discarded.

- Another RNN layer acts as a decoder: it is trained to turn the target sequences into the same sequences offset by one timestep. Effectively, the decoder learns to generate $y_{t+1}$ given $y_1,...,y_t$, conditioned on the input sequence.
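The "offset by one timestep" idea in the decoder bullet can be illustrated with plain lists. This is only a sketch of how the training pairs are built; the `<s>` and `</s>` marker tokens and the function name are assumptions chosen for the example.

```python
START, END = "<s>", "</s>"  # hypothetical start/end-of-sequence markers


def teacher_forcing_pair(summary_tokens):
    """Build the decoder input and the target shifted by one timestep."""
    decoder_input = [START] + summary_tokens
    decoder_target = summary_tokens + [END]
    return decoder_input, decoder_target


decoder_input, decoder_target = teacher_forcing_pair(["rutte", "resigns"])
for seen, to_predict in zip(decoder_input, decoder_target):
    # At each step the decoder sees the previous token and must predict the next.
    print(f"{seen!r} -> {to_predict!r}")
```

At inference time the reference summary is not available, so the decoder is fed its own previous prediction instead, starting from the start marker.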

In the case of text summarization, a seq2seq model can be represented as follows:


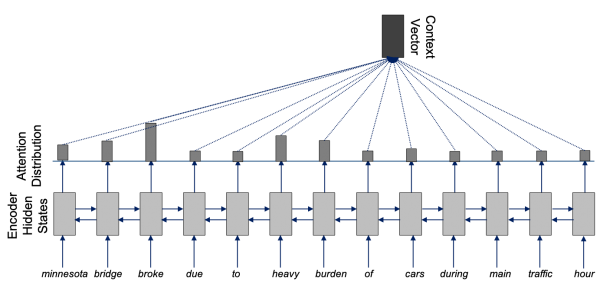

Attention Mechanisms¶

Initially designed for Neural Machine Translation with seq2seq models, attention mechanisms have gained popularity in recent years thanks to the great results they provide in multiple domains. They are used to model long-range interactions, which is particularly useful in NLP. The key idea is to build shortcuts between a context vector and the input, so that the model can attend to different parts of the input.
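As a rough illustration of these shortcuts, the sketch below computes a context vector as a weighted average of encoder states. It assumes dot-product scoring followed by a softmax, which is only one of several attention variants, and the names `softmax` and `attend` are chosen for this example.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtracted for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, encoder_states):
    """Return the attention weights and the resulting context vector."""
    scores = [sum(q * s for q, s in zip(query, state)) for state in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [
        sum(w * state[i] for w, state in zip(weights, encoder_states))
        for i in range(dim)
    ]
    return weights, context


# Three toy encoder states and one decoder query (arbitrary values).
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights, context = attend([2.0, 0.0], encoder_states)
print([round(w, 3) for w in weights])  # the 1st and 3rd states get the most weight
```

Because the weights are recomputed for every query, each decoding step can focus on a different part of the input instead of relying on a single fixed state.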

The decoder uses the → to signal when it wants to move to the next window


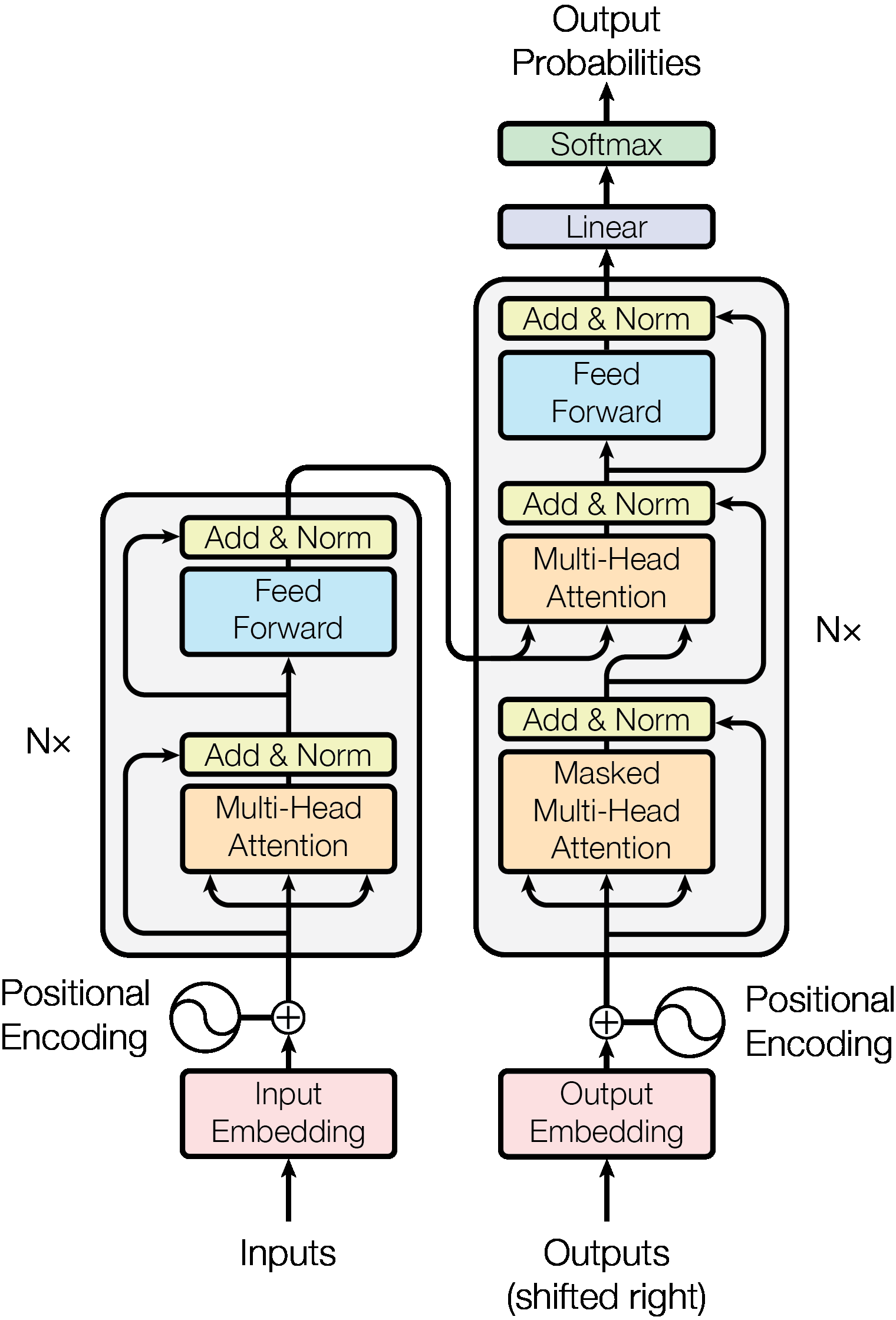

Transformers¶

Introduced by Vaswani et al. in Attention Is All You Need, Transformers are models with an architecture that relies entirely on an attention mechanism to draw global dependencies between input and output instead of using recurrent models. A great benefit is that it enables more parallelization than methods like RNNs and CNNs.

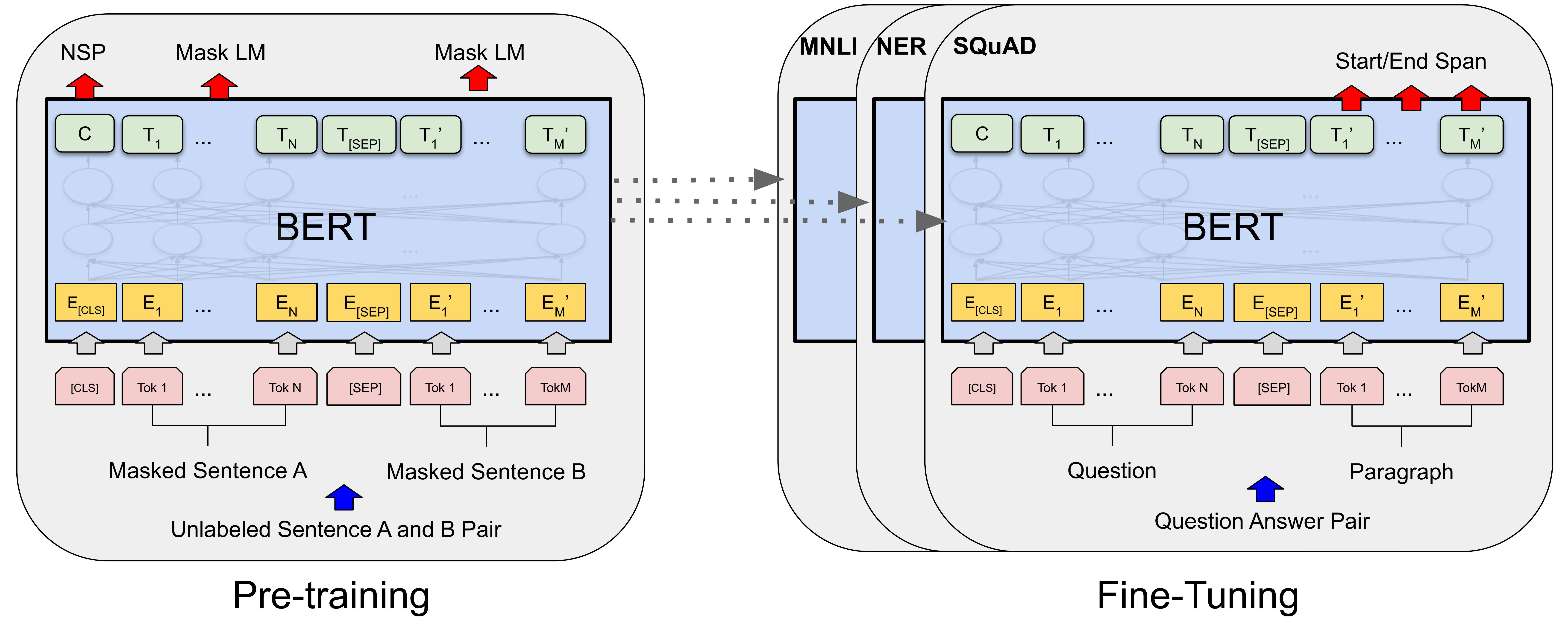

BERT¶

Bidirectional Encoder Representations from Transformers (BERT) is a language representation model built on the Transformer encoder. It removes the unidirectionality constraint by using a Masked Language Model (MLM) pre-training objective. The MLM randomly masks some tokens of the input, and the goal is to predict the original vocabulary id of each masked token based on its context. Unlike unidirectional language model pre-training, the MLM objective enables the representation to take into account both left and right context, which allows us to pre-train a deep bidirectional Transformer. In addition to the MLM, BERT uses a Next Sentence Prediction task that pre-trains text-pair representations.
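The masking step can be sketched in a few lines. This is a simplified illustration, not BERT's actual preprocessing: it works on whitespace tokens rather than WordPiece ids and always substitutes `[MASK]`, whereas the original paper replaces a selected token with `[MASK]` only 80% of the time (10% a random token, 10% unchanged). The 15% masking rate follows the paper; the function name and `seed` parameter are assumptions for reproducibility.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Mask a random subset of tokens; return (masked tokens, {position: original})."""
    rng = random.Random(seed)
    n_to_mask = min(len(tokens), max(1, round(len(tokens) * mask_prob)))
    positions = rng.sample(range(len(tokens)), n_to_mask)
    masked = list(tokens)
    labels = {}
    for pos in positions:
        labels[pos] = tokens[pos]  # what the model has to predict
        masked[pos] = MASK
    return masked, labels


sentence = "the prime minister announced the resignation of his government on friday"
masked, labels = mask_tokens(sentence.split())
print(" ".join(masked))
print(labels)
```

The model sees the whole masked sentence at once, so the prediction for each hidden position can use the words on both its left and its right.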

The training of BERT is done in two steps:

- Pre-training: the model is trained on unlabeled data over different pre-training tasks;

- Fine-tuning: the model is initialized with the pre-trained parameters, then all the parameters are fine-tuned using labeled data from the downstream tasks (MNLI, NER...), each task having its own separate fine-tuned model.

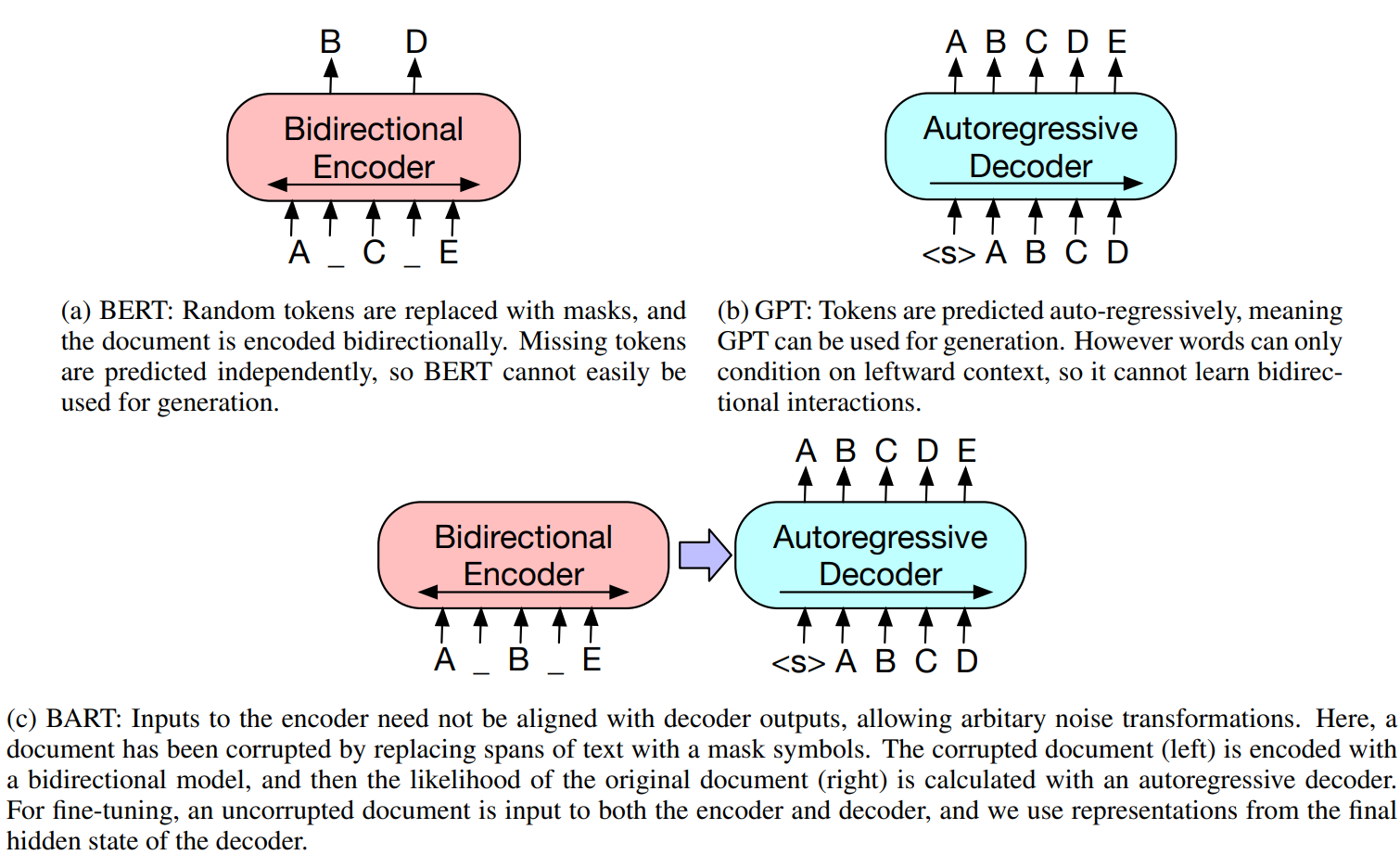

BART¶

For this summarization task, I used the BART model, recently proposed by the Facebook AI Research team. It has achieved state-of-the-art results in several NLP tasks, in particular text summarization.
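One way to run BART for summarization is the Hugging Face `transformers` pipeline, sketched below. This is an assumption for illustration: the post does not say which implementation or checkpoint was used, and `facebook/bart-large-cnn` is simply a publicly available BART checkpoint fine-tuned on news summarization. The length limits are arbitrary example values expressed in tokens.

```python
def summarize(text, model_name="facebook/bart-large-cnn", max_length=130, min_length=30):
    """Return an abstractive summary of `text` produced by a BART checkpoint."""
    # Third-party dependency (pip install transformers torch), imported here
    # because loading it is slow and the first call downloads the model weights.
    from transformers import pipeline

    summarizer = pipeline("summarization", model=model_name)
    result = summarizer(
        text,
        max_length=max_length,
        min_length=min_length,
        do_sample=False,   # deterministic decoding
        truncation=True,   # cut inputs that exceed the model's maximum length
    )
    return result[0]["summary_text"]


# Usage (requires the dependencies above and network access on first run):
# print(summarize(article_text))
```

For a daily job that summarizes several articles, it would be cheaper to build the pipeline once and reuse it rather than recreate it on every call.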

Results¶

The results given by the BART autoencoder are, from my point of view, really impressive. Here is an example:

Input article

Prime Minister Mark Rutte announced the resignation of his government on Friday, accepting responsibility for years of mismanagement of childcare subsidies, which wrongfully drove thousands of families to financial ruin.

Rutte said he had handed his resignation to King Willem-Alexander. The cabinet would remain in place in a caretaker capacity to manage the coronavirus crisis for now, with an election already due on March 17.

The resignation follows a parliamentary inquiry last month that found bureaucrats at the tax service had wrongly accused families of fraud.

The inquiry report said around 10,000 families had been forced to repay tens of thousands of euros of subsidies, in some cases leading to unemployment, bankruptcies and divorces, in what it called an “unprecedented injustice”.

Many of the families were targeted based on their ethnic origin or dual nationalities, the tax office said last year.

Orlando Kadir, an attorney representing around 600 families in a lawsuit against politicians, said people had been targeted “as a result of ethnic profiling by bureaucrats who picked out their foreign-looking names”.

The crisis comes with the Netherlands in the midst of the toughest lockdown of the COVID-19 pandemic, and Rutte considering even more curbs as infections surge.

Even though public support for the government’s COVID-19 measures has dipped sharply in recent weeks, Rutte’s People’s Party for Freedom and Democracy (VVD) is still riding high in public opinion polls ahead of the March election.

Rutte’s party is projected to take just under 30% of the vote, more than twice that seen going to the second placed PVV, the anti-Islam party of Geert Wilders. Rutte has served since 2010, having won re-election twice.

Output Summary

Prime Minister Mark Rutte accepts responsibility for mismanagement of childcare subsidies. Thousands of families forced to repay tens of thousands of euros of subsidies, leading to unemployment, bankruptcies and divorces. Inquiry last month found bureaucrats at the tax service had wrongly accused families of fraud. Rutte has served since 2010, having won re-election twice.

The result is of course not perfect: some elements are missed, and some sentences are not reformulated. It also lacks a few linking words. But overall, the summary is understandable and gives a pretty good glimpse of what the article is about!

Instagram Post Automation¶

Creating the Image¶

Once the daily news is retrieved, we have to turn it into an image with a caption. The image is composed of the title of the main article over a background image from Unsplash that is supposed to match the article's theme. The steps are:

1. Finding the main article's theme:

I retrieve the 2 tokens that occur most often in the article, excluding stopwords ("the", "a", "are", "we"...). This method is probably not optimal but gives fair enough results.
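A minimal sketch of this keyword count, using only the standard library. The short `STOPWORDS` set and the `find_theme` name are placeholders for this example; a real run would use a fuller stopword list.

```python
import re
from collections import Counter

# Tiny illustrative stopword list; a complete one would be much longer.
STOPWORDS = {"the", "a", "an", "are", "we", "of", "and", "to", "in", "is",
             "that", "for", "on", "his", "had", "said"}


def find_theme(text, n_keywords=2):
    """Return the most frequent non-stopword tokens, joined into a search query."""
    tokens = re.findall(r"[a-z]+", text.lower())  # ASCII words only, lowercased
    counts = Counter(t for t in tokens if t not in STOPWORDS and len(t) > 2)
    return " ".join(word for word, _ in counts.most_common(n_keywords))


sample = ("Rutte said the government had resigned. The government of Rutte "
          "accepted responsibility, Rutte said.")
print(find_theme(sample))  # rutte government
```

The returned string can be used directly as the search keyword for the photo lookup in the next step.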

2. Retrieving the corresponding image:

Unsplash offers a free photo API that lets us retrieve photos with a simple GET request. The only requirement is to create a developer profile on the website; then, with a few lines of Python, we can get the desired photo:

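```python
import requests

# `theme` is the search keyword; `client_id` is the Unsplash API access key.
r = requests.get(
    "https://api.unsplash.com/search/photos",
    params={"query": theme, "page": 1, "per_page": 30, "client_id": client_id},
)
data = r.json()
# URL of the first search result, in its original ("raw") resolution
photo = data["results"][0]["urls"]["raw"]
```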

3. Building the image:

Finally, to build the image, I used the Python PIL library. It contains several functions to modify a picture and draw text over it in a chosen font.
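The image-building step could look like the sketch below, assuming the Pillow package (the maintained fork of PIL). The 1080x1080 size, margins, line height and function names are illustrative choices, not the values used for the account.

```python
import textwrap


def wrap_title(title, max_chars=24):
    """Split the title into lines of at most `max_chars` characters."""
    return textwrap.wrap(title, width=max_chars)


def build_post_image(background_path, title, output_path,
                     size=(1080, 1080), margin=60, line_height=40):
    """Crop the background to `size`, write the title on it, and save the result."""
    from PIL import Image, ImageDraw, ImageFont, ImageOps  # pip install Pillow

    with Image.open(background_path) as background:
        image = ImageOps.fit(background.convert("RGB"), size)  # center crop + resize
    draw = ImageDraw.Draw(image)
    # The built-in default font keeps the sketch portable; for a real post,
    # load a TrueType font with ImageFont.truetype(<path to .ttf>, <size>).
    font = ImageFont.load_default()
    y = size[1] // 3
    for line in wrap_title(title):
        draw.text((margin, y), line, font=font, fill="white")
        y += line_height
    image.save(output_path)
    return output_path


# Usage: build_post_image("background.jpg", "Dutch government resigns", "post.jpg")
```

Wrapping the title before drawing matters because Pillow does not break long lines on its own; without it, a long headline would run off the edge of the picture.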

Posting on Instagram¶

In its battle against bots and fake accounts, Instagram has made it really hard to automate tasks with code. One of the last libraries that still successfully lets us post on Instagram is Instabot. Basically, it uses Selenium to automate some tasks on the application.
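A hedged sketch of the posting step, assuming the `instabot` package from PyPI and its `Bot.login` / `Bot.upload_photo` methods. The helper names are made up for this example, and since Instagram changes its defenses often, this may stop working at any time. The caption helper trims the text to Instagram's 2,200-character caption limit.

```python
MAX_CAPTION_CHARS = 2200  # Instagram's caption length limit


def build_caption(summaries, hashtags=()):
    """Join the article summaries and append hashtags, within the length limit."""
    tags = " ".join("#" + tag for tag in hashtags)
    suffix = "\n\n" + tags if tags else ""
    body = "\n\n".join(summaries)
    room = max(0, MAX_CAPTION_CHARS - len(suffix))
    return body[:room] + suffix  # trim the body so the hashtags are kept


def post_to_instagram(image_path, caption, username, password):
    """Log in and upload one photo with its caption."""
    from instabot import Bot  # third-party: pip install instabot

    bot = Bot()
    bot.login(username=username, password=password)
    return bot.upload_photo(image_path, caption=caption)


# Usage (credentials should come from environment variables, not source code):
# caption = build_caption(daily_summaries, hashtags=["news", "world"])
# post_to_instagram("post.jpg", caption, username, password)
```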

Possible future improvements¶

- Diversifying the news sources

- Classifying the article's topic

- Covering other topics (e.g. Sport results)

- Posting Stories (even if it seems impossible for now given the powerful anti-bot algorithms)